Previously I have written about creating a Kubernetes cluster on AWS as well as on bare metal servers.

Both of these setups require you to expose your application to the public, and that comes with cost implications. Let me start with a little background on how application deployment works.

A Pod is the smallest compute unit in Kubernetes and runs one or more containers. Each Pod has its own cluster-private IP address, which means that containers within a Pod can all reach each other’s ports on localhost, and all pods in a cluster can see each other. However, Pods can die, whether from crashing, being replaced during a rolling update, or being removed when scaling down, and the pods that replace them get different IP addresses. Therefore, accessing pods directly by IP is not a reliable way to reach the application.

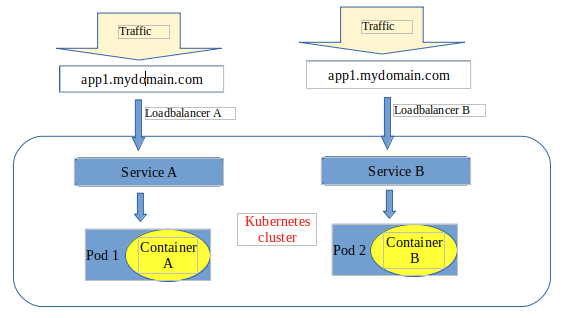

The most common way of exposing pods/services publicly is using a loadbalancer. When you are using the cloud (GCP, AWS, Azure, Digital Ocean etc.) and you create a Service of type LoadBalancer, an external access endpoint is automatically created. For GCP and Digital Ocean, this will be a public IP, e.g. 80.34.53.23, while for AWS it will be a hostname like acf86ld3bff1fd611e9as7580a43b4esb515d-792533560.us-east-1.elb.amazonaws.com.
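To make this concrete, here is a minimal sketch of what such a Service could look like. It builds the manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The app name `web`, the `app` label and the ports are placeholders I made up for illustration, not values from a real cluster.

```python
import json


def load_balancer_service(app_name: str, port: int, target_port: int) -> dict:
    """Build a Service manifest of type LoadBalancer for one application.

    The names and labels are hypothetical; adjust them to match your
    own Deployment's pod labels.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{app_name}-lb"},
        "spec": {
            # On a cloud provider this type asks for an external loadbalancer.
            "type": "LoadBalancer",
            # Traffic is sent to pods carrying this label.
            "selector": {"app": app_name},
            "ports": [
                {"port": port, "targetPort": target_port, "protocol": "TCP"}
            ],
        },
    }


lb_manifest = load_balancer_service("web", 80, 8080)
print(json.dumps(lb_manifest, indent=2))
```

You could redirect the output to a file such as `web-lb.json` and apply it with `kubectl apply -f web-lb.json`. Every application exposed this way gets its own loadbalancer.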

These endpoints can be used to configure DNS records in Cloudflare or any other DNS management service you are using. However, loadbalancers come at a cost that increases as you deploy more applications, since each application requires its own dedicated loadbalancer. The figure below shows, at a high level, what the deployment looks like.

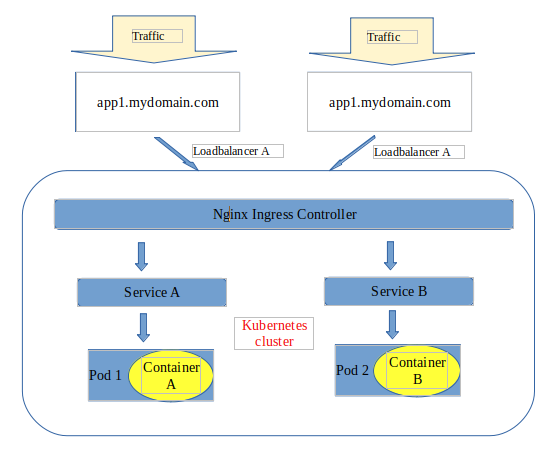

This is where Ingress controllers become a savior. There are a few options to choose from, including Traefik, HAProxy and the Kubernetes Nginx Ingress controller. In this blog I will focus on Kubernetes Nginx Ingress. The diagram below shows a complete setup of the nginx ingress controller at a high level.

Notice that we are using one loadbalancer to configure the domain names, irrespective of the number of apps deployed. This loadbalancer points to the ingress controller, which then decides where to direct traffic based on the configured ingress rules and annotations.
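As an illustration of what those ingress rules can look like, the sketch below generates a single Ingress manifest that routes several hostnames to different Services. The hostnames, Service names and the Ingress name `apps-ingress` are hypothetical, and I am assuming the controller was installed with the ingress class `nginx`; check your own installation before relying on that.

```python
import json


def ingress_for(hosts_to_services: dict, port: int = 80) -> dict:
    """Build one Ingress with a host-based rule per application."""
    rules = []
    for host, service in hosts_to_services.items():
        rules.append(
            {
                "host": host,
                "http": {
                    "paths": [
                        {
                            # Send every path under this host to one Service.
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {
                                "service": {
                                    "name": service,
                                    "port": {"number": port},
                                }
                            },
                        }
                    ]
                },
            }
        )
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "apps-ingress"},  # hypothetical name
        # Assumption: the controller's ingress class is called "nginx".
        "spec": {"ingressClassName": "nginx", "rules": rules},
    }


ingress_manifest = ingress_for(
    {"shop.example.com": "shop-svc", "blog.example.com": "blog-svc"}
)
print(json.dumps(ingress_manifest, indent=2))
```

Both hostnames would point at the same loadbalancer in DNS; the controller looks at the requested host and forwards the request to the matching Service.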

Being able to deploy multiple applications behind a single loadbalancer makes nginx ingress a real cost saver compared to using a separate loadbalancer for every application you deploy. I know you are now wondering what happens when traffic spikes and the ingress controller is overwhelmed. In Kubernetes you can scale the ingress controller and have multiple pods running, let's say 10 instances. Kubernetes will then distribute incoming traffic across the different pods, in a roughly round robin manner, so that no single instance has to absorb the whole spike.
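The effect of round robin distribution is easy to see with a toy model. This is only a simulation in plain Python, not how Kubernetes implements it internally (the real behaviour depends on how your cluster proxies traffic), and the pod names are made up.

```python
from collections import Counter
from itertools import cycle


def distribute(requests, pods):
    """Assign each request to the next pod in turn (round robin)."""
    next_pod = cycle(pods)
    return [(request, next(next_pod)) for request in requests]


# Ten hypothetical ingress controller replicas.
controller_pods = [f"ingress-nginx-{i}" for i in range(10)]

# Spread 1000 simulated requests over the replicas.
assignments = distribute(range(1000), controller_pods)
load = Counter(pod for _, pod in assignments)
print(load)
```

With 10 replicas, each pod ends up handling a tenth of the requests instead of one pod taking all of them.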

Implementation

Configuring the nginx ingress controller is easy and fast thanks to its awesome documentation, which is very thorough, especially for cloud deployments.

Extra tweaking might be necessary for a bare metal setup, since a loadbalancer option is not available there. In my experience, NodePort is the only viable and stable option to expose your nginx ingress on a public IP.
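A NodePort Service for the controller could be sketched as follows. The Service name, the selector labels and the port numbers 30080 and 30443 are assumptions for illustration; use the labels your controller pods actually carry. The only hard constraint here is that node ports must fall in the default Kubernetes range of 30000 to 32767, unless your cluster was configured with a different range.

```python
import json

# Default NodePort range in Kubernetes (inclusive on both ends).
NODE_PORT_RANGE = range(30000, 32768)


def node_port_service(name: str, selector: dict,
                      http_node_port: int, https_node_port: int) -> dict:
    """Build a NodePort Service exposing HTTP and HTTPS on every node."""
    for node_port in (http_node_port, https_node_port):
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": selector,
            "ports": [
                {"name": "http", "port": 80, "targetPort": 80,
                 "nodePort": http_node_port},
                {"name": "https", "port": 443, "targetPort": 443,
                 "nodePort": https_node_port},
            ],
        },
    }


# Hypothetical name and labels; match them to your controller's pods.
nodeport_manifest = node_port_service(
    "ingress-nginx-nodeport",
    {"app.kubernetes.io/name": "ingress-nginx"},
    http_node_port=30080,
    https_node_port=30443,
)
print(json.dumps(nodeport_manifest, indent=2))
```

Once applied, the controller is reachable on `<node-public-ip>:30080` and `<node-public-ip>:30443` on every node in the cluster.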